Adobe Firefly 3 debuts with lifelike AI image generation, challenging DALL-E 3 and Midjourney.

The Adobe Firefly family of generative AI models has a less-than-stellar reputation among creatives.

Particularly in comparison to Midjourney, OpenAI’s DALL-E 3, and other competitors, the Firefly image-generation model has been criticized as lackluster and defective, as it has a propensity to distort landscapes and limbs and overlook the nuances of prompts. However, this week at the company’s Max London conference, Adobe is attempting to right the ship with the release of Firefly Image 3, its third-generation model.

The model, which is presently accessible in Photoshop (beta) and the Adobe Firefly web app, generates imagery that is more “realistic” than that of its predecessors (Image 1 and Image 2). This is due to the model’s enhanced lighting and text-generation capabilities and its capacity to comprehend lengthier, more complex prompts and scenes.

Adobe claims that Image 3 renders elements such as typography, iconography, raster images, and line art with greater precision. Furthermore, the company says the model exhibits “considerable” improvement in its ability to depict crowded scenes and people with “detailed features” and “a range of emotions and expressions.”

In my brief, unscientific evaluation, Image 3 is an improvement over Image 2.

I was unable to try Image 3 myself. However, Adobe PR provided a few of the model’s outputs along with their prompts, and I ran those same prompts through Image 2 on the web to obtain samples to compare against the Image 3 outputs. (Bear in mind that the Image 3 outputs may have been cherry-picked.)

Compare the lighting in the Image 3 headshot with that in the Image 2 headshot below it:

In my opinion, Image 3’s output appears more realistic, with detailed contrast and shadowing that are significantly more pronounced than in the Image 2 sample.

The following images illustrate the application of scene comprehension in Image 3:

The Image 2 sample is relatively simplistic compared to the output of Image 3, both in level of detail and in overall expressiveness. The subject in the Image 3 shirt sample shows some distortion (around the midsection), but the pose is more complex than that of the subject in Image 2. (Additionally, the clothing in Image 2 is a touch off.)

Image 3 has undoubtedly benefited from a larger and more varied training set.

Like Image 2 and Image 1, Image 3 is trained on content uploaded to Adobe Stock, Adobe’s royalty-free media library, along with licensed content and public domain content for which the copyright has expired. Adobe Stock is continuously expanding, and as a result, so is the available training dataset.

To mitigate legal risks and establish itself as a more “ethical” alternative to generative AI providers that train on images indiscriminately (e.g., OpenAI, Midjourney), Adobe has implemented a compensation program for Adobe Stock contributors whose work is in the training dataset. (However, the program’s terms are somewhat ambiguous.) Adobe also trains Firefly models on AI-generated images, a controversial practice that some consider to be a form of data laundering.

Bloomberg recently reported that AI-generated images in Adobe Stock are not excluded from the training data for Firefly image-generating models. That is a concern because those images may contain regurgitated copyrighted material.

Adobe has defended the practice, asserting that AI-generated images constitute a negligible proportion of its training data and that they are moderated to exclude references to artists’ names, trademarks, or recognizable characters.

Neither diverse, more “ethically” sourced training data nor content filters and other safeguards can guarantee an entirely flawless experience (see users generating people flipping the bird with Image 2). Image 3 will get a proper evaluation once the wider public has spent time with it.

New Features Powered by AI

In addition to the enhanced text-to-image function, Image 3 powers several new Photoshop features.

A new “style engine” and an accompanying auto-stylization toggle in Image 3 enable the model to produce a wider variety of subject poses, backgrounds, and colors. Both tie into Reference Image, a setting that lets users instruct the model to generate content matching the tone or color scheme of a given image.

Three new generative tools use Image 3 to perform precise image edits: Generate Background, Generate Similar, and Enhance Detail. Generate Background (self-descriptive) replaces an existing background with a generated one that blends into the scene. Generate Similar provides variations on a selected portion of a photograph (e.g., an object or person). Enhance Detail “fine-tunes” images to increase their clarity and sharpness.

These features have been in beta in the Firefly web application for at least one month (and available in Midjourney for much longer). This marks their beta debut in Photoshop.

As for the web application, Adobe is not neglecting this alternative route to its AI tools.

Structure Reference and Style Reference are being added to the Firefly web application in tandem with the release of Image 3. Adobe describes these features as “advancements in creative control.” (Although both were introduced in March, they are only now becoming widely accessible.) With Structure Reference, users can generate new images that match the “structure” of a reference image, such as a head-on view of a race car.

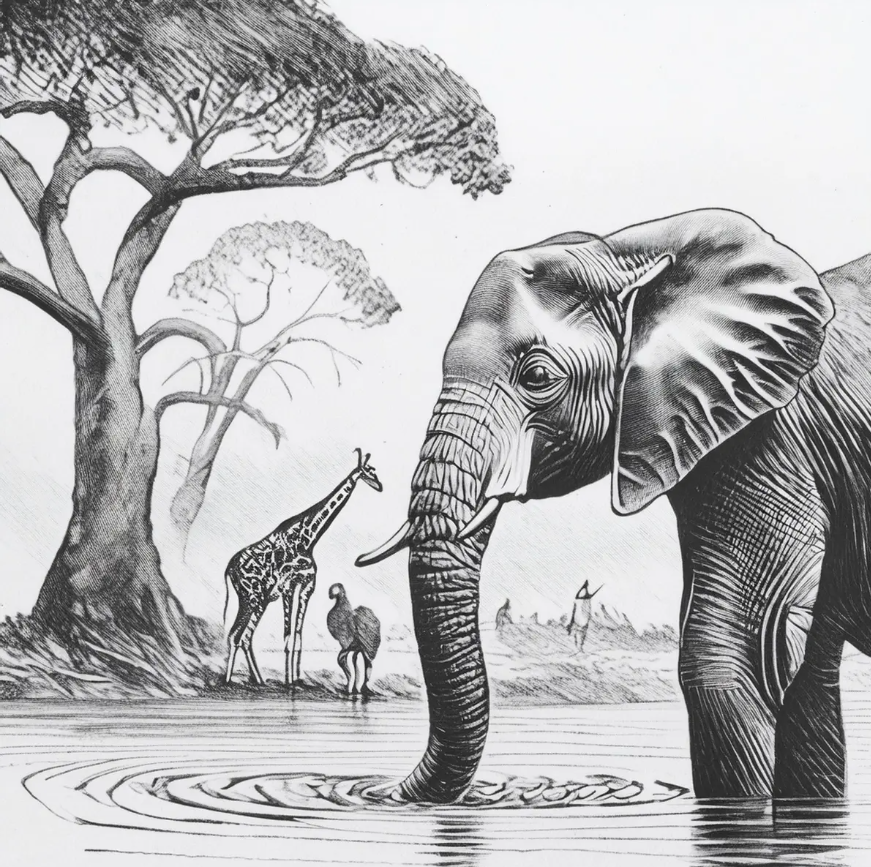

Style Reference is essentially style transfer by another name: it emulates the style of a target image (e.g., pencil sketch) while preserving the content (e.g., elephants on an African expedition).

An example of Structure Reference at work:

I asked whether Adobe would change the pricing of Firefly image generation in light of the numerous enhancements. At present, Firefly’s most affordable premium plan costs $4.99 per month, which undercuts rivals such as Midjourney ($10 per month) and OpenAI, which requires a $20-per-month ChatGPT Plus subscription to access DALL-E 3.

Adobe stated that its present tiers and generative credit system will remain in effect. The company added that its indemnity policy, under which Adobe will pay copyright claims associated with works produced in Firefly, and its method for watermarking AI-generated content will also remain unchanged.

Content Credentials, metadata that identifies media generated by artificial intelligence, will continue to be appended automatically to all Firefly image generations on the web and in Photoshop. This applies whether an image was generated from scratch or only partially edited using generative features.