AI-Enhanced Fraud Detection in Crypto Exchanges uses machine learning to spot suspicious activity faster and safeguard traders from evolving threats.

- 1 Introduction

- 2 The threat landscape exchanges face in 2024–2025

- 3 Core AI techniques used by exchanges

- 4 Data: The Fuel and the Friction

- 5 Real-world architecture: how exchanges stitch AI into operations

- 6 Case Studies & Mini Post-Mortems

- 7 Risks, Blind Spots, and the Adversarial Arms Race

- 8 Compliance, Policy, and Global Coordination

- 9 Conclusion

- 10 FAQ

- 10.1 What is AI-enhanced fraud detection and how is it different from rules-based monitoring?

- 10.2 Can AI completely stop exchange hacks?

- 10.3 How do GNNs detect money laundering?

- 10.4 What privacy risks come with AI monitoring?

- 10.5 How do regulators view AI-based detection?

- 10.6 Are AI systems prone to false positives?

Introduction

By 2025, the headline thefts forced platforms to rethink detection — AI is now essential, not optional. Staggering losses have rocked the crypto industry recently: more than $2 billion in cryptocurrency was stolen by hackers in the first half of 2025, according to the blockchain security firm Chainalysis.

Most of the total comes from the $1.5 billion stolen from Dubai-based crypto platform Bybit in February by hackers connected to North Korea.

The $2.17 billion stolen so far this year already surpasses the losses seen in all of 2024, and is the highest number seen in the first six months of a year since the company began tracking the figures in 2022.

Chainalysis estimates that up to $4 billion worth of cryptocurrency may be stolen by the end of the year.

Meanwhile, global illicit on-chain activity, including scams, stolen funds, and sanctions evasion, may have exceeded $51 billion in 2024.

Faced with this surge, exchange operators cannot afford to treat fraud detection as a checkbox. It has to be adaptive, which in practice means AI-Enhanced Fraud Detection in Crypto Exchanges.

In this article, you’ll learn how AI-Enhanced Fraud Detection in Crypto Exchanges is rapidly transforming the battleground against theft and scams. Once a “nice-to-have,” AI tools are now vital in spotting anomalous patterns, thwarting complex scam chains, and safeguarding billions in assets.

The threat landscape exchanges face in 2024–2025

AI-Enhanced Fraud Detection in Crypto Exchanges is no longer merely a proactive advantage—exchanges are deploying it because adversaries have significantly raised the stakes.

In 2024, crypto platforms suffered $2.2 billion in stolen funds—a 21% rise over 2023—as attacks climbed from 282 to 303 incidents.

That year, notable cases included the WazirX hack in July, where around $235 million was siphoned by North Korea’s Lazarus Group via manipulation of multisignature controls.

The ramp-up has accelerated into 2025. In just the first half, losses surged past $2.17 billion, exceeding the full year of 2024. The record-breaking $1.5 billion Bybit heist accounted for most of it and was traced back to DPRK-linked actors.

Beyond exchange breaches, fraud has diversified. AI-powered scams, such as deepfake impersonations, investment fraud, pig-butchering romance schemes, and synthetic identity schemes, are exploding: crypto scam losses reached $4.6 billion in 2024 thanks to AI-powered deception, and overall fraud surged by 456% between mid-2024 and mid-2025.

Criminals also increasingly launder funds through mixers and stablecoins. In 2024, illicit wallets received up to $51 billion, with stablecoins, prized for liquidity and anonymity, accounting for 63% of illicit transaction volume.

This underscores how traditional laundering paths have evolved.

Regulatory pressure has ramped up in tandem. The FATF has called for stronger global action: as of April 2025, only 40 of 138 jurisdictions were deemed “largely compliant” with its crypto standards, signaling widespread regulatory gaps.

FATF also emphasized global risks from stablecoin and DeFi misuse and telegraphed forthcoming scrutiny.

Given this threat proliferation, rule-based detection systems—static thresholds, manual rulesets—fail to scale. They falter against high-velocity, AI-driven scams, synthetic identities, and obfuscated laundering through mixers and stablecoins. Automation and adaptive intelligence are now essential for resilience.

Core AI techniques used by exchanges

Supervised models & anomaly scoring

Supervised learning underpins much of today’s KYC and transaction risk analysis. Exchanges train gradient-boosted trees or neural nets on labelled examples of legitimate versus suspicious accounts and transfers.

Features often include transaction velocity (e.g. bursts of small withdrawals), counterparty risk scores, geolocation mismatches, and device/browser fingerprints.

Labels come from prior confirmed fraud cases, law-enforcement referrals, and internal investigations. The “cold-start” problem—where new users or novel scams have no history—remains a challenge; some exchanges mitigate it by combining supervised models with unsupervised anomaly detectors to flag unusual behaviour before labels exist.
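As a rough illustration of the feature side, the sketch below derives two of the signals mentioned above, withdrawal velocity and a geolocation mismatch flag, from raw events. The `Withdrawal` record, its field names, and the one-hour window are assumptions made for this example, not any exchange's actual schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Withdrawal:
    # Hypothetical event record; field names are illustrative only.
    account_id: str
    amount: float
    timestamp: datetime
    ip_country: str


def velocity_features(events, kyc_country, window=timedelta(hours=1)):
    """Return simple risk features for one account's withdrawals.

    max_count_in_window: largest number of withdrawals inside any sliding window.
    geo_mismatch: 1 if any withdrawal came from a country other than the KYC country.
    """
    events = sorted(events, key=lambda e: e.timestamp)
    max_count = 0
    start = 0
    for end, event in enumerate(events):
        # Advance the left edge until the window spans at most `window`.
        while event.timestamp - events[start].timestamp > window:
            start += 1
        max_count = max(max_count, end - start + 1)
    geo_mismatch = int(any(e.ip_country != kyc_country for e in events))
    return {"max_count_in_window": max_count, "geo_mismatch": geo_mismatch}
```

Features like these would typically be joined with counterparty and device signals before being passed to a gradient-boosted tree or neural model.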

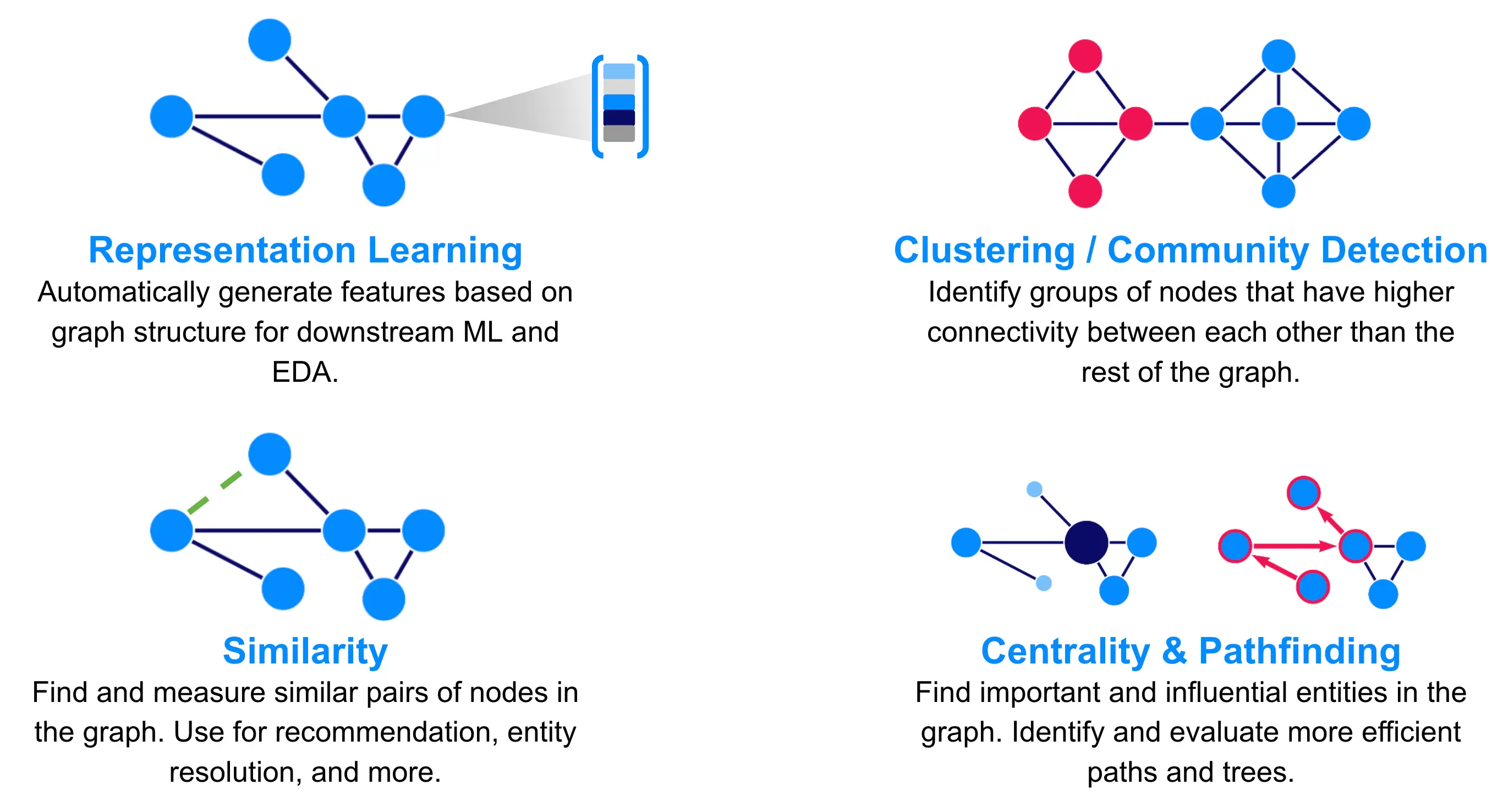

Graph ML & Graph Neural Networks (GNNs) for on-chain behaviour

On-chain data is inherently relational: addresses send to addresses, which interact via contracts and intermediaries. Graph ML represents this as a network, where nodes are wallets/contracts and edges are transactions with attributes (amount, token type, timestamp).

Graph Neural Networks propagate information across multiple hops, learning money-flow patterns and community structures that tabular models miss.

This lets them uncover layered laundering paths through mixers or cross-asset swaps. Published research on cryptocurrency transaction networks reports that GNNs can outperform tabular baselines at detecting illicit subgraphs.

As adversaries adopt obfuscation, AI-Enhanced Fraud Detection in Crypto Exchanges increasingly relies on such graph-based approaches to preserve visibility across the full transaction graph.
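To make the multi-hop idea concrete, here is a minimal sketch of mean-aggregation message passing on a toy transaction graph, written in plain Python. It is not a trained GNN: there are no learned weights, and the address names, the single risk score per node, and the 0.5 mixing factor are all assumptions chosen for illustration.

```python
from collections import defaultdict


def propagate_risk(edges, seed_risk, rounds=2, mix=0.5):
    """Spread a risk score across a transaction graph by neighbour averaging.

    edges: iterable of (sender, receiver) address pairs.
    seed_risk: dict mapping known-bad addresses to a score in [0, 1].
    Each round, a node's score becomes a blend of its own score and the
    mean score of its neighbours, so after k rounds information has
    travelled k hops.
    """
    neighbours = defaultdict(set)
    for sender, receiver in edges:
        # Treat the graph as undirected for this illustration.
        neighbours[sender].add(receiver)
        neighbours[receiver].add(sender)

    risk = {node: seed_risk.get(node, 0.0) for node in neighbours}
    for _ in range(rounds):
        updated = {}
        for node, adjacent in neighbours.items():
            mean_adjacent = sum(risk[n] for n in adjacent) / len(adjacent)
            updated[node] = (1 - mix) * risk[node] + mix * mean_adjacent
        risk = updated
    return risk
```

With a chain such as mixer → a → b → c, one round only touches `a`, two rounds reach `b`, and three reach `c`. A real GNN layer follows the same neighbour-aggregation pattern but learns the transformation from labelled data.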

Temporal / sequence models

Transactions and user actions form time-ordered sequences. Models like LSTMs and Transformer variants learn temporal dependencies: for example, a rapid alternation between deposit/withdrawal in specific token pairs can indicate laundering, while repeated login-transfer-logout cycles from new devices may suggest account takeover or scripted abuse.

Sequence models can also segment behaviours into “normal” patterns (e.g. regular trading) versus synthetic activity generated by bots. Exchanges use these to reduce false positives by considering the order and timing of events, not just static snapshots.
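The ordering signal a sequence model picks up can be approximated by hand for illustration. The sketch below counts rapid deposit/withdrawal flips in an event stream; it is a hand-written feature standing in for what an LSTM or Transformer would learn, and the tuple layout and 60-second gap are assumptions.

```python
def count_rapid_alternations(events, max_gap_seconds=60):
    """Count deposit/withdrawal flips that happen within max_gap_seconds.

    events: list of (unix_timestamp, kind) tuples with kind in
    {"deposit", "withdrawal"}, assumed to be sorted by timestamp.
    """
    flips = 0
    for (prev_ts, prev_kind), (ts, kind) in zip(events, events[1:]):
        if kind != prev_kind and ts - prev_ts <= max_gap_seconds:
            flips += 1
    return flips
```

A static snapshot of the same account (total deposited, total withdrawn) would look identical whether the events alternated within seconds or were spread over weeks, which is why order and timing matter.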

Unsupervised anomaly detection & clustering

When labels are scarce or zero-day tactics emerge, unsupervised methods are invaluable. Autoencoders compress transaction feature vectors and flag high-reconstruction-error cases as anomalies; isolation forests recursively partition data to isolate rare points.

Clustering can reveal new scam rings or laundering routes by grouping addresses with similar behavioural signatures. These methods can surface threats that haven’t yet been codified into rules, providing early warning for investigator review.
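The isolation-forest idea can be sketched in a few dozen lines of standard-library Python. This is a simplified teaching version under stated assumptions (tuples of numeric features, a fixed seed, default tree counts picked arbitrarily); a production system would more likely use a maintained implementation such as scikit-learn's `IsolationForest`.

```python
import math
import random


def _build_tree(points, depth, max_depth, rng):
    """Recursively split points on a random feature and threshold."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    feature = rng.randrange(len(points[0]))
    low = min(p[feature] for p in points)
    high = max(p[feature] for p in points)
    if low == high:
        return {"size": len(points)}
    threshold = rng.uniform(low, high)
    left = [p for p in points if p[feature] < threshold]
    right = [p for p in points if p[feature] >= threshold]
    return {
        "feature": feature,
        "threshold": threshold,
        "left": _build_tree(left, depth + 1, max_depth, rng),
        "right": _build_tree(right, depth + 1, max_depth, rng),
    }


def _avg_path_length(n):
    """Approximate expected path length of an unsuccessful BST search over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = math.log(n - 1) + 0.5772156649
    return 2.0 * harmonic - 2.0 * (n - 1) / n


def _path_length(point, node, depth=0):
    """Depth at which `point` lands, plus an adjustment for unsplit leaves."""
    if "feature" not in node:
        return depth + _avg_path_length(node["size"])
    branch = "left" if point[node["feature"]] < node["threshold"] else "right"
    return _path_length(point, node[branch], depth + 1)


def isolation_scores(points, n_trees=100, sample_size=64, seed=0):
    """Return one anomaly score in (0, 1) per point; higher means easier to isolate."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    rng = random.Random(seed)
    sample_size = min(sample_size, len(points))
    max_depth = math.ceil(math.log2(sample_size))
    trees = [
        _build_tree(rng.sample(points, sample_size), 0, max_depth, rng)
        for _ in range(n_trees)
    ]
    norm = _avg_path_length(sample_size)
    scores = []
    for point in points:
        mean_depth = sum(_path_length(point, tree) for tree in trees) / n_trees
        scores.append(2.0 ** (-mean_depth / norm))
    return scores
```

Points that sit far from the bulk of the data are separated after very few random splits, so their average path is short and their score approaches 1, while typical points score around 0.5 or below.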

Hybrid systems & human-in-the-loop workflows

No model is perfect, so leading exchanges combine them in hybrid pipelines. An AI layer triages alerts from supervised, graph, sequence, and anomaly detectors, ranking them by confidence.

Human analysts then investigate high-priority cases, applying contextual knowledge and cross-platform intelligence. Feedback from these reviews—confirmed fraud, false positive, benign irregularity—feeds back into retraining, closing the loop.

Chainalysis’ “Rapid” platform, for instance, uses AI to generate concise investigative summaries, cutting analyst time-to-insight and enabling faster response to unfolding attacks.

Data: The Fuel and the Friction

Effective fraud detection in crypto exchanges depends on integrating both on-chain and off-chain data sources. On-chain data includes wallet-to-wallet flows, public transaction chains, and token swap histories.

Off-chain signals encompass exchange order-book activity, IP and device telemetry (fingerprints, login patterns), and richer identity artifacts like KYC documentation.

However, label scarcity remains a substantial hurdle. True fraud instances are rare relative to the volume of clean activity, making supervised training datasets limited and imbalanced.

Privacy concerns compound this—KYC and telemetry data are highly regulated, and exchanges must balance anti-fraud capability with user confidentiality.

Recognizing this, collaborative efforts have begun to surface. Notably, Elliptic, MIT, and IBM released an unprecedented 200-million-transaction dataset of blockchain subgraphs indicative of money-laundering behavior. It is designed to help train detection models without revealing personally identifiable data.

These shared datasets exemplify how anonymized structural patterns can fuel research.

Media outlets like Wired have also spotlighted such data releases as critical stepping stones for community-wide advances in AI-driven crypto analytics.

Data governance also plays a pivotal role: exchanges must define retention policies and access controls, and account for how compliance requirements shape both. Regulatory regimes may require holding certain user data for defined periods, while minimizing retention of sensitive details once risk subsides.

Moreover, differential privacy offers a mathematically sound way to share aggregate or anonymized insights, allowing federated analytics and model training while limiting exposure of individual-level data.
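A small worked example may help here. The sketch below applies the Laplace mechanism, one standard way to achieve differential privacy, to a count such as "accounts flagged this week". The function names and the choice of epsilon are assumptions, and the floating-point sampling is fine for illustration but not hardened for production use.

```python
import random


def laplace_noise(scale, rng):
    """Draw zero-mean Laplace noise.

    The difference of two independent exponential draws with mean `scale`
    follows a Laplace(0, scale) distribution.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count, epsilon, rng=None):
    """Release a count with epsilon-differential privacy.

    A counting query has sensitivity 1 (adding or removing one user changes
    the result by at most 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Smaller values of epsilon add more noise and give stronger privacy; larger values keep the released figure closer to the true count.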

When combined properly, these signal stacks—merging on-chain transaction graphs, off-chain telemetry, and KYC-derived features—empower more robust detections with AI-Enhanced Fraud Detection in Crypto Exchanges.

Real-world architecture: how exchanges stitch AI into operations

In production, exchanges weave together layered detection systems that can respond to threats in milliseconds. A typical real-time pipeline begins with streaming ingestion of on-chain events, order-book updates, and off-chain telemetry.

These feeds are parsed and normalized before being passed into one or more model-scoring layers. The architecture often combines AI-Enhanced Fraud Detection in Crypto Exchanges with legacy rule engines, allowing the system to blend adaptive intelligence with regulatory-friendly, auditable conditions.

Once scores are generated, a decision layer applies policy: routing transactions to automated blocking, throttling, or placement into “risk buckets” for further review. High-risk cases may trigger immediate freezes, while medium-risk flows go to analyst queues.
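The decision layer described above can be pictured as a small policy function. This is a sketch only: the `Action` names, the rule-precedence choice, and the 0.5 and 0.9 thresholds are assumptions, not values taken from any vendor or exchange.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REVIEW = "review"  # medium risk: route to an analyst queue
    FREEZE = "freeze"  # high risk: hold the transaction immediately


def decide(model_score, rule_hits, review_at=0.5, freeze_at=0.9):
    """Map a model score and rule-engine hits to a policy action.

    model_score: probability-like risk score in [0, 1].
    rule_hits: names of hard rules that fired (for example a sanctions match).
    The thresholds are placeholders; real values would come from calibration.
    """
    if not 0.0 <= model_score <= 1.0:
        raise ValueError("model_score must be in [0, 1]")
    if rule_hits:
        # Auditable hard rules take precedence over the model.
        return Action.FREEZE
    if model_score >= freeze_at:
        return Action.FREEZE
    if model_score >= review_at:
        return Action.REVIEW
    return Action.ALLOW
```

Letting explicit rules override the model keeps the strictest outcomes easy to explain to auditors, while the score handles the grey area in between.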

Chainalysis KYT (“Know Your Transaction”) and Elliptic Navigator exemplify vendor solutions that integrate model outputs into compliance dashboards, enabling investigators to explore suspicious wallet flows in context.

Latency vs. model complexity is a constant balancing act. Lightweight scorers—such as linear models or gradient-boosted trees—are deployed inline for sub-second scoring of all transactions.

Heavier models like GNNs or temporal Transformers, which require aggregating multi-hop network data or long activity sequences, are often run asynchronously or on subsets of flagged cases.

This ensures the exchange can process high-volume transaction streams without introducing user-visible delays, while still applying deep analytics where it matters most.
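One way to picture the split between inline and asynchronous scoring is sketched below: a tiny logistic scorer runs on every transaction, and only those above an escalation threshold are queued for a heavier model. The weights, feature names, and threshold are hypothetical, and the in-memory `deque` stands in for whatever message queue a real deployment would use.

```python
import math
from collections import deque

# Hypothetical weights for a tiny logistic scorer; real weights would be learned.
WEIGHTS = {"amount_zscore": 1.2, "new_device": 0.8, "counterparty_risk": 2.0}
BIAS = -3.0


def fast_score(features):
    """Cheap inline score: logistic function of a weighted feature sum."""
    z = BIAS + sum(WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def score_inline(tx_id, features, deep_queue, escalate_at=0.3):
    """Score every transaction inline; defer flagged ones to the deep lane."""
    score = fast_score(features)
    if score >= escalate_at:
        # A worker running a GNN or sequence model would consume this later.
        deep_queue.append(tx_id)
    return score
```

Because the expensive model only sees the escalated subset, its latency does not sit on the critical path for ordinary transactions.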

Automation is critical, but human-in-the-loop review remains essential. Many architectures feature dual pipelines: a “fast lane” for blocking egregious threats and a “deep lane” for cases that require enrichment with external intelligence feeds.

Alerts from both lanes feed into unified analyst tools, where transaction visualizations, wallet histories, and AI-generated summaries help speed investigations.

Ultimately, the operational blueprint blends speed, depth, and compliance—ensuring exchanges can act on AI insights without sacrificing customer experience or regulatory obligations.

Case Studies & Mini Post-Mortems

A High-Value Heist Detected Too Late

The surge in crypto thefts to over $2.17 billion in the first half of 2025 is largely driven by the record-setting $1.5 billion Bybit hack, the single largest crypto heist in history.

The sheer scale of the attack, attributed to state-sponsored actors, overwhelmed traditional monitoring pipelines. Rule-based systems relied on predefined thresholds—such as unusually large withdrawals—but were unable to scale fast enough or detect sophisticated multi-hop laundering patterns.

Had AI-Enhanced Fraud Detection in Crypto Exchanges been more deeply embedded, lightweight anomaly detectors could have flagged subtle deviations in fund flow velocity or geolocation, triggering earlier holds and investigator alerts.

In other words, without adaptive AI that learns from evolving attacker behavior, analysts often receive the alert only after funds have already left the system.

Successful Interdiction Using Graph ML

In contrast, Elliptic’s recent deployment of graph-ML–based behavioral detection offers a compelling success story. Their system automatically identifies “pig butchering” and laundering patterns (such as sequential baiting transfers followed by large deposits) and flags them within the Elliptic Investigator tool.

In one case, the platform detected interconnected wallets funneling funds through an illicit chain involving peel chains and nested services, enabling an exchange to freeze suspect deposits swiftly and proactively disrupt the laundering cycle.

Key Lessons Learned

- Signal integration matters: Combining on-chain flow patterns with off-chain risk signals significantly improves detection sensitivity and acts faster than isolated rules.

- Human plus AI workflows: The best results emerge when AI-driven alerts channel into human analyst review, where context and investigative nuance help avoid both under- and over-alerting.

- Managing false positives: AI tools must be calibrated to minimize false positives—too many alerts erode trust. Tiered scoring (low/medium/high risk) and feedback loops for retraining models help tune precision over time.
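The calibration point in the last lesson can be made concrete with a short sketch: given analyst-reviewed alerts, pick the lowest score threshold that still meets a target precision. The function name, the input layout, and the 0.8 default are assumptions for illustration.

```python
def pick_threshold(scored_alerts, target_precision=0.8):
    """Return the lowest score threshold whose precision meets the target.

    scored_alerts: list of (score, is_fraud) pairs from analyst-reviewed cases.
    An alert fires when score >= threshold. Returns None if no threshold
    reaches the target precision.
    """
    ranked = sorted(scored_alerts, key=lambda pair: pair[0], reverse=True)
    best = None
    true_positives = 0
    for index, (score, is_fraud) in enumerate(ranked, start=1):
        true_positives += int(is_fraud)
        # Evaluate only at the last alert sharing this score so ties stay together.
        if index < len(ranked) and ranked[index][0] == score:
            continue
        if true_positives / index >= target_precision:
            best = score
    return best
```

Re-running this after each batch of analyst feedback is one simple way to keep the alert volume in line with what reviewers can trust and handle.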

These case studies underscore that AI-driven systems aren’t just bells and whistles—they’re mission-critical in both preventing large-scale thefts and stopping illicit chains early.

Risks, Blind Spots, and the Adversarial Arms Race

At the forefront of today’s fraud landscape, AI-Enhanced Fraud Detection in Crypto Exchanges must contend with increasingly sophisticated evasion tactics—often hidden within seemingly innocuous inputs.

One emerging threat is the use of synthetic identities and AI-generated fake personas, especially deepfakes, to bypass KYC systems.

Cybercriminals deploy so-called “Repeaters”—slightly varied synthetic identities enhanced with deepfake technology—to test and exploit verification processes across platforms.

These evolve over multiple submissions, evading detection by mimicking legitimate behavior just enough to slip through isolated checks.

Beyond deepfakes, adversarial evasion techniques allow fraudsters to create inputs that mislead AI models, causing misclassification despite appearing innocuous to humans.

Attackers might also poison training datasets or embed subtle backdoors, undermining the robustness of detection systems.

False positives, another major blind spot, come at a non-trivial cost. When legitimate users are flagged as fraudulent, the consequences can include user frustration, lost transactions, increased support costs, and ultimately, revenue loss—plus potential reputation damage.

Overly aggressive blocking risks alienating users and may even cross regulatory lines by unjustifiably limiting access or services.

From an ethical and governance standpoint, the use of opaque or “black-box” AI models raises serious concerns. Regulators increasingly demand transparency and explainability—especially where decisions affect users’ financial freedom.

Auditable, interpretable models or explainable AI (XAI) techniques are critical to maintaining compliance, building user trust, and ensuring fair treatment.

In sum, exchanges must navigate a delicate balance: advancing detection fidelity while staying resilient against adversarial manipulation, minimizing user disruption, and upholding ethical standards. It’s an arms race—one where AI defenders must raise the bar just to keep up.

Compliance, Policy, and Global Coordination

Global regulatory bodies—most notably the FATF—have increasingly shaped how crypto exchanges must monitor and detect illicit activity.

As part of its virtual asset standards, the FATF mandates that Virtual Asset Service Providers (VASPs) implement customer due diligence (CDD), record keeping, suspicious transaction reporting (STRs), and the “Travel Rule” for sharing sender and recipient data in transfers.

Its latest update (June 2025) revealed that only 40 of 138 jurisdictions assessed were “largely compliant” with its crypto standards as of April 2025, highlighting persistent implementation gaps worldwide.

To bridge detection and enforcement gaps, data-sharing consortiums have become indispensable. These industry coalitions allow VASPs to anonymously share behavioral patterns, perpetrator markers, and case resolution insights, enabling faster identification of cross-platform fraud and laundering trends.

Privacy-enhancing methods like federated learning, homomorphic encryption, and differential privacy can preserve user confidentiality while facilitating valuable signal sharing.
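To show what federated learning means mechanically, the sketch below implements the aggregation step of federated averaging: each participant trains locally and shares only model weights, which a coordinator combines in proportion to local sample counts. The data layout is an assumption, and real deployments would add secure aggregation and other safeguards on top.

```python
def federated_average(client_updates):
    """Combine per-client model weights into one global model (FedAvg-style).

    client_updates: list of (weights, n_samples), where weights is a list of
    floats. Each client's weights count in proportion to how many local
    samples it trained on; raw transactions never leave the client.
    """
    total = sum(n_samples for _, n_samples in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    size = len(client_updates[0][0])
    global_weights = [0.0] * size
    for weights, n_samples in client_updates:
        if len(weights) != size:
            raise ValueError("clients must share one model shape")
        for i, weight in enumerate(weights):
            global_weights[i] += weight * n_samples / total
    return global_weights
```

For example, two exchanges reporting weights `[1.0, 0.0]` on 100 samples and `[3.0, 4.0]` on 300 samples would yield a global model of `[2.5, 3.0]`.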

For VASPs aiming to stay ahead, a risk-based approach—grounded in documented policies and transparent model governance—is essential. Exchanges should:

- Conduct regular risk assessments across jurisdictions and asset types.

- Maintain robust governance frameworks covering AI model validation, auditability, and periodic review.

- Document and justify the design of detection models and decision thresholds, aligning with both FATF expectations and internal compliance protocols.

These measures ensure VASPs not only meet regulatory obligations but also uphold trusted, resilient operations—with AI detection systems that are accountable, transparent, and scalable.

Conclusion

In the near term, we’re entering a high-stakes AI arms race: fraudsters are embracing generative tools to craft ever-more convincing scams, while exchanges ramp up deployment of GNNs and temporal transformer models to stay ahead.

This escalating duel promises both increasing sophistication on the attack side and rapid defensive innovation.

Looking further ahead, the industry is moving toward interoperable alert standards (imagine real-time cross-platform flagging that works like OFAC sanctions screening combined with KYT monitoring) and highly automated forensic tooling that can trace illicit flows across ecosystems instantly.

The evolution of AI-Enhanced Fraud Detection in Crypto Exchanges will increasingly define the resilience and integrity of the crypto sector.

FAQ

What is AI-enhanced fraud detection and how is it different from rules-based monitoring?

AI-enhanced fraud detection uses machine learning models to adapt to evolving threats, unlike static rules-based systems that rely on predefined patterns.

Can AI completely stop exchange hacks?

No. AI reduces risk and speeds detection, but it can’t guarantee prevention. Human oversight and layered security remain essential.

How do GNNs detect money laundering?

Graph Neural Networks analyze transaction relationships and flow patterns, making it easier to spot suspicious chains across wallets.

What privacy risks come with AI monitoring?

Risks include potential misuse of personal data and over-collection. Privacy-by-design and differential privacy help mitigate these.

How do regulators view AI-based detection?

Regulators increasingly encourage AI tools, but expect explainability, proper governance, and compliance with AML/KYC standards.

Are AI systems prone to false positives?

Yes. Poorly tuned models may over-flag legitimate activity, causing user friction and operational costs.