Google is attempting to make waves with Gemini, its flagship suite of generative AI models, apps, and services.

So, what is Gemini? How can you use it? And how does it stack up against the competition?

We’ve compiled this handy guide to help you stay current on Gemini developments. It will be updated as new Gemini models, features, and news about Google’s ambitions for Gemini become available.

What is Gemini?

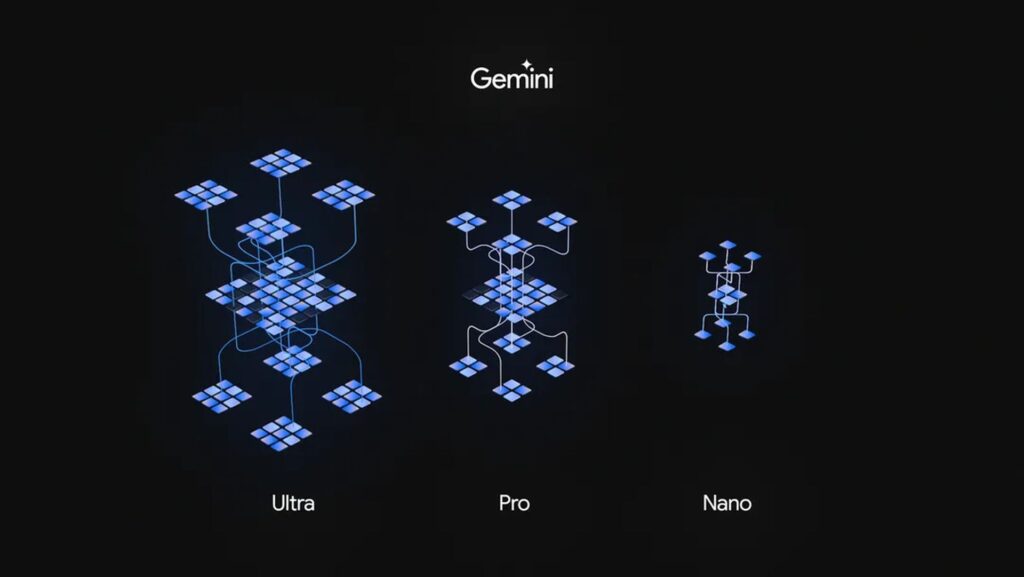

Gemini is Google’s long-awaited next-generation GenAI model family, created by Google’s AI research laboratories DeepMind and Google Research. It comes in three flavors:

Gemini Ultra is the highest-performing Gemini model.

Gemini Pro is a “lite” Gemini variant.

Gemini Nano is a smaller, “distilled” model that runs on mobile devices such as the Pixel 8 Pro.

All Gemini models were trained to be “natively multimodal,” that is, able to work with and use more than just text. They were pre-trained and fine-tuned on a variety of audio, images, videos, codebases, and text in several languages.

This distinguishes Gemini from models like Google’s LaMDA, which was trained solely on textual input. LaMDA cannot understand or generate anything besides text (such as essays or email drafts), whereas Gemini models can.

What is the difference between Gemini apps and Gemini models?

Once again demonstrating that branding is not its strong suit, Google did not make it clear from the start that the Gemini models are distinct from the Gemini apps for the web and mobile (previously Bard). The Gemini apps are simply interfaces through which you can access specific Gemini models; think of them as clients for Google’s GenAI.

Furthermore, the Gemini apps and models are entirely independent of Imagen 2, Google’s text-to-image model included in some of the company’s development tools and environments.

What can Gemini do?

Because the Gemini models are multimodal, they can in theory perform a range of tasks, including speech transcription, image and video captioning, and artwork generation. Some of these capabilities have yet to reach product form (more on that later), but Google promises that all of them, and more, will be available soon.

Of course, it is hard to take the company at its word.

Google underdelivered with the initial Bard launch. More recently, it ruffled feathers with a video purporting to demonstrate Gemini’s capabilities, which turned out to be heavily doctored and more or less aspirational.

Still, assuming Google’s claims are more or less accurate, here is what the various tiers of Gemini will be able to do once they reach their full potential:

Gemini Ultra

Google says that Gemini Ultra, thanks to its multimodality, can help with physics homework, solving problems step by step on a worksheet and pointing out possible mistakes in answers that have already been filled in.

According to Google, Gemini Ultra can also locate scientific publications relevant to a specific topic, extract information from those papers, and “update” a chart by creating the formulas required to recreate the graphic with more recent data.

As previously mentioned, Gemini Ultra supports image generation. However, that capability has yet to make it into the productized version of the model, perhaps because the mechanism is more complex than the way apps like ChatGPT generate images. Rather than feeding prompts to a separate image generator (as ChatGPT does with DALL-E 3), Gemini produces images “natively,” without an intermediate step.

Gemini Ultra is available as an API through Vertex AI, Google’s fully managed AI developer platform, and AI Studio, a web-based tool for app and platform developers. It also powers the Gemini apps, but not for free. Access to Gemini Ultra through Gemini Advanced requires a subscription to the Google One AI Premium Plan, which costs $20 per month.

The AI Premium Plan also connects Gemini to your wider Google Workspace account, including your emails in Gmail, documents in Docs, spreadsheets in Sheets, and Google Meet recordings. This is useful for, say, summarizing emails or having Gemini take notes during a video call.

Gemini Pro

Google claims that Gemini Pro outperforms LaMDA regarding reasoning, planning, and understanding.

An independent study by Carnegie Mellon and BerriAI researchers found that the original version of Gemini Pro was better than OpenAI’s GPT-3.5 at handling longer and more complex reasoning chains. However, the study also found that, like all large language models, this version of Gemini Pro struggled with math problems involving several digits, and users identified cases of poor reasoning and obvious errors.

Google promised fixes, and the first arrived in the form of Gemini 1.5 Pro.

Gemini 1.5 Pro is designed to be a drop-in replacement and improves on its predecessor in several areas, most notably the amount of data it can process. Gemini 1.5 Pro can take in around 700,000 words or 30,000 lines of code, 35 times what Gemini 1.0 Pro can handle. And because the model is multimodal, it is not limited to text.

Gemini 1.5 Pro can analyze up to 11 hours of audio or an hour of video in a variety of languages, albeit slowly (for example, looking for a scene in an hour-long movie takes 30 seconds to a minute to process).

In April, Gemini 1.5 Pro entered public preview on Vertex AI.

An additional endpoint, Gemini Pro Vision, can process text and imagery, including photos and videos, and output text along the lines of OpenAI’s GPT-4 with Vision model.

Within Vertex AI, developers can fine-tune Gemini Pro or “ground” it for specific contexts and use cases. Gemini Pro can also be connected to external third-party APIs to perform particular actions.

AI Studio includes workflows for creating structured chat prompts with Gemini Pro. Developers have access to both the Gemini Pro and Gemini Pro Vision endpoints, and they can adjust the model temperature to control the output’s creative range, provide examples to set tone and style, and tune the safety settings.
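To make the temperature setting a little more concrete, here is a minimal, generic sketch of how a sampling temperature reshapes a model’s next-word probabilities. It is an illustration of the general technique only, not Google’s implementation or the AI Studio API; the function name `softmax_with_temperature` and the example scores are made up for this example.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by a sampling temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words (assumed values).
logits = [2.0, 1.0, 0.1]

low = softmax_with_temperature(logits, 0.2)   # low temperature: sharper
high = softmax_with_temperature(logits, 2.0)  # high temperature: flatter

print("low temperature: ", [round(p, 3) for p in low])
print("high temperature:", [round(p, 3) for p in high])
```

At a low temperature, nearly all of the probability lands on the top-scoring word, so output is more predictable. At a high temperature, the probabilities even out and less likely words are picked more often, which is what “creative range” amounts to in practice.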

Gemini Nano

Gemini Nano is a significantly smaller version of the Gemini Pro and Ultra variants. It is efficient enough to perform tasks directly on (certain) phones rather than sending them to a server. So far, it drives several functions on the Pixel 8 Pro, Pixel 8, and Samsung Galaxy S24, such as Summarize in Recorder and Smart Reply in Gboard.

The Recorder app lets users record and transcribe audio with a single button press. It includes a Gemini-powered summary of recorded conversations, interviews, presentations, and other snippets. Users get these summaries even when no signal or Wi-Fi connection is available, and, in a nod to privacy, no data leaves their phones in the process.

Gemini Nano is also in Gboard, Google’s keyboard app. There, it powers a feature called Smart Reply, which suggests the next thing you might want to say during a conversation in a messaging app. According to Google, the feature will initially work only with WhatsApp but will eventually come to other apps.

Nano also powers Magic Compose in the Google Messages app on supported devices, letting users draft messages in styles such as “excited,” “formal,” and “lyrical.”

Is Gemini better than OpenAI’s GPT-4?

Google has repeatedly touted Gemini’s superiority on benchmarks, claiming that Gemini Ultra exceeds current state-of-the-art results on “30 of the 32 widely used academic benchmarks used in large language model research and development.” Meanwhile, the company says that Gemini 1.5 Pro outperforms Gemini Ultra in some scenarios, such as summarizing content, brainstorming, and writing; this is likely to change with the release of the next Ultra model.

Leaving aside the question of whether benchmarks really indicate a better model, the scores Google points to are only marginally better than those of comparable OpenAI models. And, as previously stated, some early impressions have been negative, with users and academics pointing out that the older version of Gemini Pro gets basic facts wrong, struggles with translations, and gives poor coding suggestions.

How much does Gemini cost?

Gemini 1.5 Pro is now free to use in the Gemini apps, as well as AI Studio and Vertex AI.

Once Gemini 1.5 Pro exits preview in Vertex, input will cost $0.0025 per character, while output will cost $0.00005 per character. Vertex customers pay per 1,000 characters (about 140 to 250 words) and, in the case of models such as Gemini Pro Vision, per image ($0.0025).

Let’s say a 500-word article contains 2,000 characters. Summarizing that article with Gemini 1.5 Pro would cost $5. Meanwhile, generating an article of similar length would cost $0.10.
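The arithmetic behind that example can be sketched in a few lines of Python. The per-character rates are the ones quoted above; the constant and function names are just illustrative, and the calculation assumes, as the example does, that summarizing is billed on the input characters and generating on the output characters.

```python
from decimal import Decimal

# Per-character rates as quoted in this article (post-preview Vertex pricing).
INPUT_PRICE_PER_CHAR = Decimal("0.0025")
OUTPUT_PRICE_PER_CHAR = Decimal("0.00005")


def cost(characters, price_per_char):
    """Return the charge in dollars for a given number of characters."""
    return characters * price_per_char


article_chars = 2000  # the article's assumed size for a 500-word piece

summarize_cost = cost(article_chars, INPUT_PRICE_PER_CHAR)  # billed on input
generate_cost = cost(article_chars, OUTPUT_PRICE_PER_CHAR)  # billed on output

print(f"Summarize: ${summarize_cost:.2f}")
print(f"Generate:  ${generate_cost:.2f}")
```

`Decimal` is used instead of floating-point numbers so that small per-character rates multiply out without rounding noise.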

Ultra pricing has yet to be announced.

Where can you try Gemini?

Gemini Pro

The easiest place to experience Gemini Pro is in the Gemini apps, where Pro and Ultra answer queries in a range of languages.

Gemini Pro and Ultra are also available in preview in Vertex AI via an API. The API is free to use “within limits” and supports certain regions, including Europe, as well as features such as chat functionality and filtering.

In addition, Gemini Pro and Ultra can be found in AI Studio. Using the service, developers can iterate on prompts and Gemini-based chatbots and then get API keys to use them in their apps, or export the code to a more fully featured IDE.

Code Assist (formerly Duet AI for Developers), Google’s suite of AI-powered code completion and generation tools, uses Gemini models. With it, developers can make “large-scale” changes across codebases, such as updating cross-file dependencies and reviewing large amounts of code.

Google has integrated Gemini models into Chrome, its Firebase mobile development platform, and its database creation and management tools. It has also introduced new security products built on Gemini, such as Gemini in Threat Intelligence, a component of Google’s Mandiant cybersecurity platform that can analyze large portions of potentially malicious code and let users run natural language searches for ongoing threats or indicators of compromise.

Gemini Nano

Gemini Nano is currently available on the Pixel 8 Pro, Pixel 8, and Samsung Galaxy S24, with plans to expand to other devices. Developers interested in building the model into their Android apps can sign up for a sneak peek.

Is Gemini coming to iPhone?

It might! Apple and Google are reportedly in talks about using Gemini to power a number of features in an iOS update due later this year. Nothing is final, as Apple is also reportedly negotiating with OpenAI and working on its own GenAI capabilities.