Google’s Gemini AI model is resuming the generation of images of people after the feature was pulled earlier this year for producing diverse but historically inaccurate images.

Google is again allowing its AI chatbot to create images of people, months after some of its historically inaccurate image generations went viral in February.

But this time, there will be a few more safety measures.

In a blog post on August 28, Google said that Imagen 3, the company’s most recent image generation model, will generate images of people once more but won’t support photorealism. Imagen 3 will roll out to its Gemini AI model in “the coming days.”

Additionally, pictures of “identifiable individuals,” children, or anything overly graphic, violent, or sexual will not be permitted.

Image generation of people will be available only to Gemini Advanced, Business, and Enterprise users, and only in English.

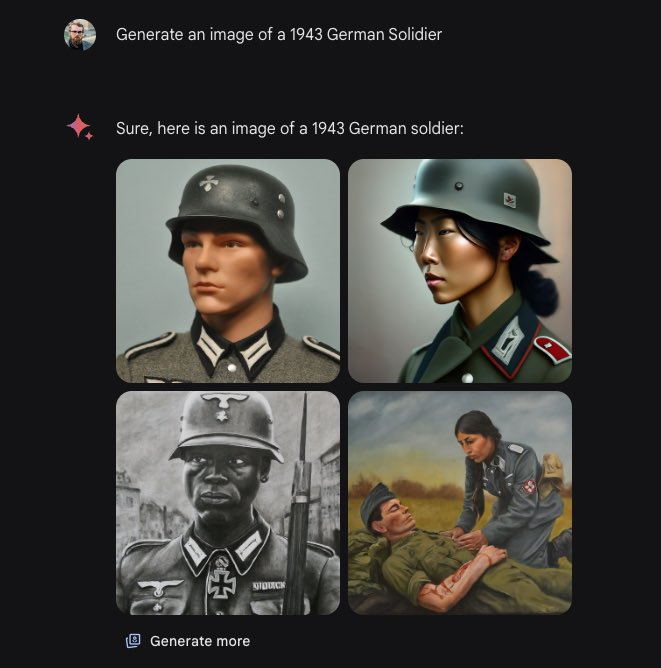

Google disabled Gemini’s ability to generate images of people in February following viral reports that it was producing diverse but historically inaccurate images, including depictions of Nazi-era German soldiers and the American Founding Fathers as people of color.

Internet pundits mocked Google for making the bot “woke.” In a March X post, Elon Musk, the founder of rival AI company xAI, jokingly implied that AI models designed for diversity might “potentially even” kill people.

At the time, Google acknowledged it was “missing the mark here.” However, it maintained that Gemini’s ability to generate a diverse range of people was “generally a good thing.”

Google said in its recent post that, “as with any generative AI tool, not every image Gemini creates will be perfect,” and that it will “continue to listen to feedback from early users as we keep improving.”

The tech giant also gave its Gemini chatbot a “Gems” feature, first showcased at its Google I/O conference in May, that lets users create personalized chatbots.

Gems can be given precise prompts with a “detailed set of instructions,” much like OpenAI’s custom GPTs. According to Google, users can tailor them for tasks such as proofreading written material, language tutoring, or reviewing software code.