Google announced Monday that it would require advertisers to disclose election ads that use digitally altered video to depict real or realistic-looking people or events, its latest move to combat election misinformation.

Under the revised disclosure requirements of its political content policy, advertisers must select a checkbox in the “altered or synthetic content” section of their campaign settings.

The rapid growth of generative AI, which can produce text, images, and video in seconds in response to prompts, has raised concerns about its potential misuse.

The rise of deepfakes, content that has been convincingly manipulated to misrepresent someone, has further blurred the lines between the real and the fake.

Google said it will generate an in-ad disclosure for feeds and snippets on mobile phones, as well as in-streams on computers and television. For other formats, advertisers must provide a “prominent disclosure” that is readily apparent to users.

Google stated that the “acceptable disclosure language” will differ based on the context of the advertisement.

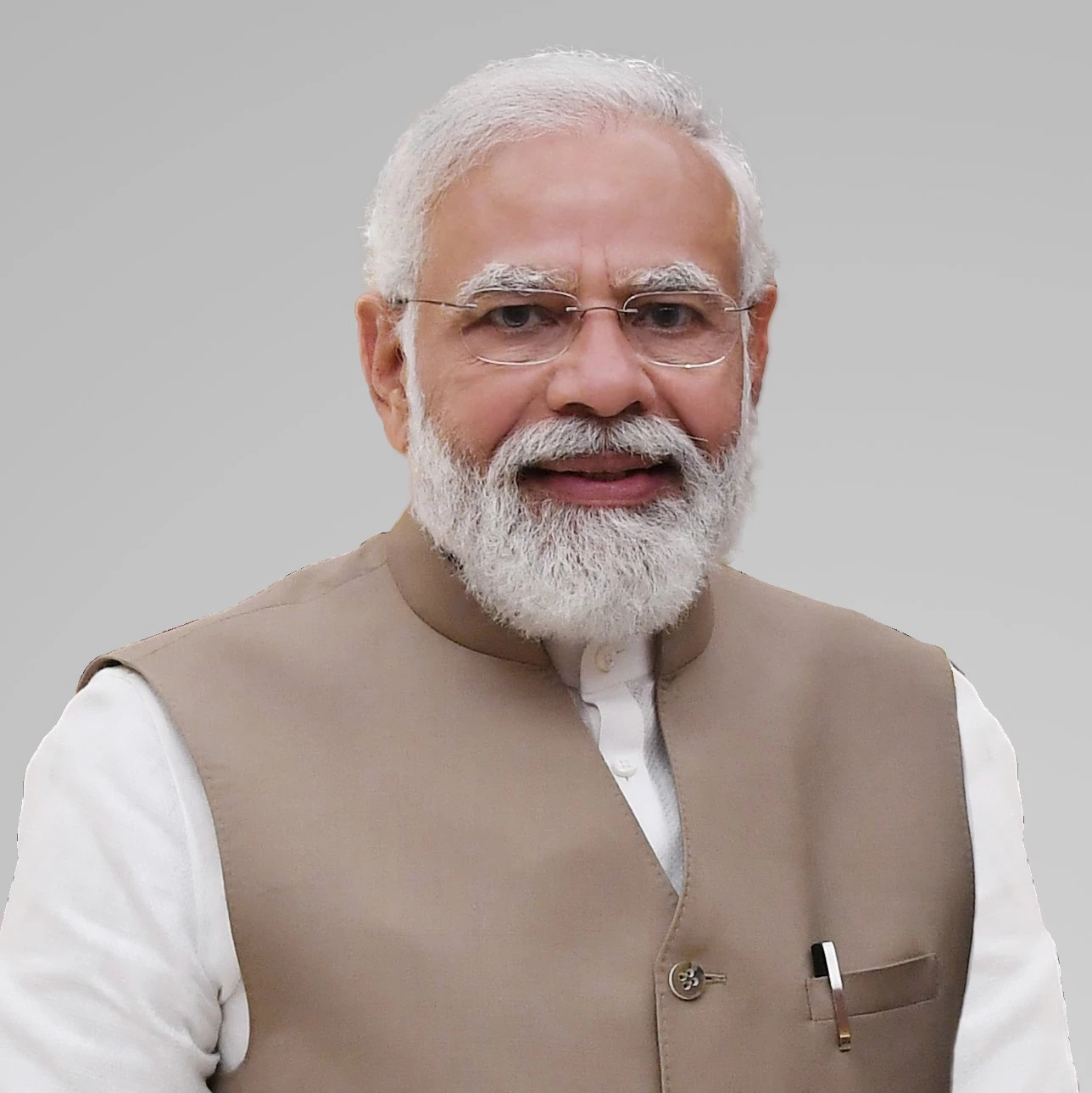

In April, during India's general election, fake videos of two Bollywood actors criticizing Prime Minister Narendra Modi went viral online. Both AI-generated videos urged people to vote for the opposition Congress party.

In May, OpenAI, led by Sam Altman, said it had disrupted five covert influence operations that sought to use its AI models for “deceptive activity” across the internet in an “attempt to manipulate public opinion or influence political outcomes.”

Last year, Meta Platforms announced that it would require advertisers to disclose whether AI or other digital tools are being used to alter or create political, social, or election-related advertisements on Facebook and Instagram.