OpenAI unveils GPT-4o, which now powers ChatGPT with additional features, including video capabilities.

On Monday, OpenAI unveiled GPT-4o, its new flagship generative AI model. The "o" stands for "omni," referring to the model's ability to handle text, speech, and video. GPT-4o is set to roll out "iteratively" across the company's developer and consumer-facing products over the coming weeks.

According to OpenAI CTO Mira Murati, GPT-4o offers "GPT-4-level" intelligence but improves on GPT-4's capabilities across multiple modalities and media.

"GPT-4o reasons across voice, text, and vision," Murati said Monday during a streamed presentation at OpenAI's offices in San Francisco. "And this is incredibly important, because we're looking at the future of interaction between humans and machines."

GPT-4 Turbo, OpenAI's previous "leading" and "most advanced" model, was trained on a combination of text and images and could analyze both to perform tasks such as extracting text from images or describing their content. GPT-4o adds speech to that mix.

What does this enable? A number of things.

GPT-4o significantly improves the experience of ChatGPT, OpenAI's AI-powered chatbot. ChatGPT has long offered a voice mode, which relied on a text-to-speech model to read the chatbot's replies aloud; GPT-4o supercharges this mode, letting users interact with ChatGPT more like an assistant.

For example, users can ask the GPT-4o-powered ChatGPT a question and interrupt it while it is answering. According to OpenAI, the model offers "real-time" responsiveness and can even pick up on nuances in a user's voice, responding with generated voices in "a variety of different emotive styles" (including singing).

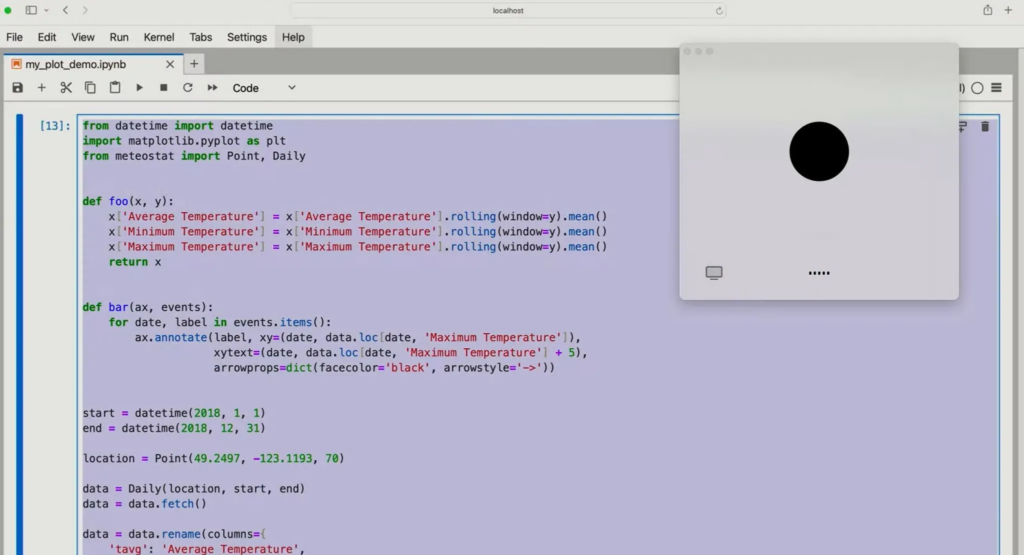

GPT-4o also upgrades ChatGPT's vision capabilities. Given a photo or a desktop screen, ChatGPT can now quickly answer related questions, ranging from "What is the purpose of this software code?" to "Which brand of shirt is this person wearing?"

Murati says these features will continue to evolve. Today, GPT-4o can look at a picture of a menu in a foreign language and translate it. In the future, it could allow ChatGPT to "watch" a live sports game and explain the rules.

"We know that these models are getting more and more complex, but we want the experience of interaction to become more natural and effortless. Instead of worrying about the user interface, you can simply focus on collaborating with ChatGPT," Murati said. "For the past couple of years, we've been very focused on improving the intelligence of these models […] But this is the first time that we are really making a huge step forward when it comes to ease of use."

OpenAI claims that GPT-4o is also more multilingual, with improved performance in around 50 languages. And in OpenAI's API, GPT-4o is twice as fast as, half the price of, and has higher rate limits than GPT-4 Turbo.

For now, voice is not part of the GPT-4o API for all customers. Citing the risk of misuse, OpenAI says it plans to first launch support for GPT-4o's new audio capabilities to "a small group of trusted partners" in the coming weeks.

Starting today, GPT-4o is available in the free tier of ChatGPT and to subscribers of OpenAI's premium ChatGPT Plus and Team plans, who get message limits that are "five times higher." (OpenAI notes that when users hit the rate limit, ChatGPT will automatically switch to GPT-3.5, an older and less capable model.) In about a month, the improved ChatGPT voice experience powered by GPT-4o will arrive in alpha for Plus users, along with enterprise-focused options.

OpenAI also unveiled a desktop ChatGPT app for macOS that lets users ask questions via a keyboard shortcut or take and discuss screenshots, along with a redesigned ChatGPT user interface (UI) for the web with a "more conversational" home screen and message layout. ChatGPT Plus users get early access to the macOS app starting today; a Windows version will follow later in the year.

The GPT Store, OpenAI's library of third-party chatbots built on its AI models, is now available to users of ChatGPT's free tier. Free users also gain access to previously paid ChatGPT features, such as a memory capability that lets the app "remember" user preferences for future interactions.