Experts in cybersecurity and artificial intelligence provide their perspectives on the social media titan’s use of ‘Made with AI’ labels.

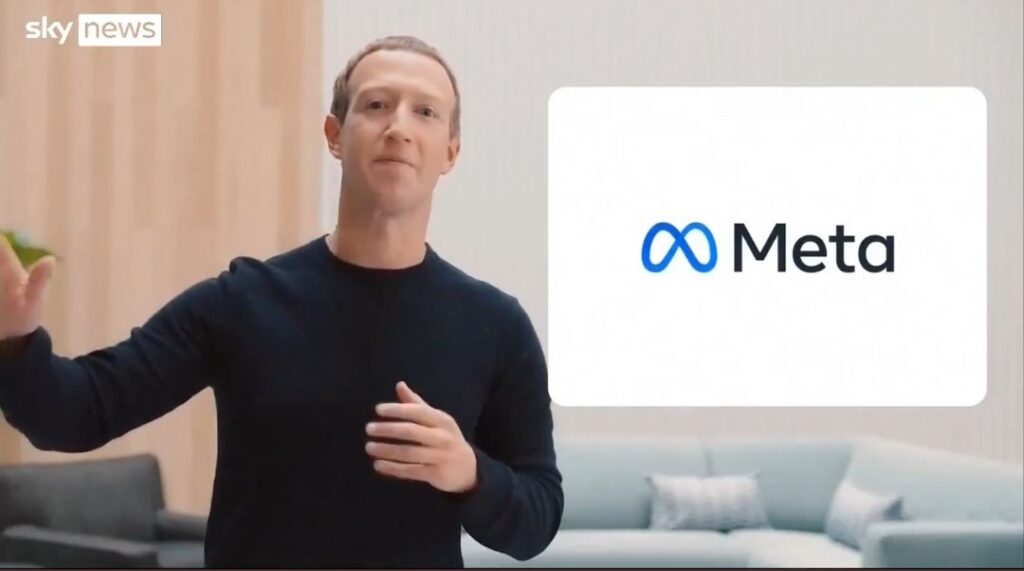

Earlier this month, Meta revised its four-year-old manipulated media policy in response to the substantial surge in AI-generated content across its platforms.

Beginning in May of this year, AI-generated images, audio, and video on Instagram and Facebook will be accompanied by “Made with AI” labels. Digitally altered videos and photos will carry similar labels.

While the new policy aims to reconcile internet safety with Meta’s user trends, the question remains: does it adequately address the issue of misinformation? Experts in the industry assert that it is a complex challenge.

What Prompted Meta’s AI Labels?

“Meta’s introduction of AI content and manipulated media labels is a positive step, but it might not fully address the complexities of AI-generated and manipulated content,” Alon Yamin pointed out to ExtremeTech.

Yamin is the CEO and co-founder of Copyleaks, an online platform that assists users in identifying instances of plagiarism or AI-generated text.

Copyleaks’ suite includes the AI Content Detector browser extension, which helps users identify AI-generated content on news platforms, social media, review sites, and other online environments.

“But looking ahead to the 2024 election, Meta’s initiative could be seen as a proactive step in safeguarding online discourse integrity amidst increasing scrutiny of digital information,” Yamin added.

In fact, Meta decided to start labeling digitally altered and AI-generated content in anticipation of the upcoming US election cycle (or, depending on one’s viewpoint, while that cycle was already under way).

Meta drafted its policy on manipulated media in 2020, a time when realistic AI content was considerably less prevalent than it is today. In May 2023, a Facebook user uploaded an altered video that appeared to show President Biden inappropriately touching his adult granddaughter.

The altered video was created from a genuine (and considerably less harmful) clip recorded during the midterm elections six months earlier, when Biden and his granddaughter cast their ballots.

At the time, Meta’s manipulated media policy did not include audiovisual content or account for any alterations made without AI. As a result, Meta left the video online in accordance with its own policy, even though it fit the textbook definition of misinformation and posed a threat to voters in the impending election.

Meta’s Oversight Board advised the company, in light of its investigation into the incident above, to “reevaluate the extent to which its manipulated media policy encompasses audio and audiovisual content, content that portrays individuals performing actions or making statements they did not make, and content irrespective of its creation or alteration process.”

As per Meta’s announcement on April 5, the public, academic institutions, and civil society organizations all expressed support for revising Meta’s policy regarding manipulated media.

“Made with AI” labels will now appear at the top of posts containing video, audio, and images modified or created using AI, as inferred from user disclosures or common indicators.

Meta will add a more conspicuous label with additional context to content it deems to pose “a significantly high risk of materially misleading the public on an important matter” if it determines that such content was altered or generated by artificial intelligence.

Meta will not remove content generated or modified by artificial intelligence unless it violates the company’s Community Standards. However, Meta will reduce the visibility of content its network of fact-checkers rates as “altered” or “false,” so that fewer users encounter it in their feeds.

Yamin told us it is encouraging that Meta made the revision without being legally required to do so. “Considering that Facebook and Instagram, both owned by Meta, were big proponents of spreading misinformation during the last election, Meta potentially feels the most pressure around proper generative AI regulation,” he said. “As a leader in its field, Meta frequently establishes benchmarks by addressing public apprehensions regarding the veracity of information and misinformation.”

This action calls for solid safeguards and can be considered a model for other platforms encountering comparable pressures.

With the introduction of mandatory AI-generated content labels by other platforms, including YouTube, such disclosures may eventually become standard practice.

The Broader Context: A Closer Look at What Led to Meta’s AI Labels

Lisa Plaggemier, executive director of the National Cybersecurity Alliance, asserts that the need for identifiers on AI-generated content has never been more critical. “Cybercriminals are leveraging AI in sophisticated ways to manipulate public perception on sensitive topics like elections,” she says.

Plaggemier pointed to deepfakes, which have been used in fake Zoom interviews and revenge pornography, among other things. She says deepfakes are also used to fabricate actions that elected officials or candidates never took.

“By leveraging AI, cybercriminals can rapidly produce and disseminate these misleading materials across social media platforms, where they can quickly go viral and spread misinformation,” she said.

Nor are these isolated occurrences. According to Plaggemier, deepfakes and other deceptive AI-generated content are frequently employed in misinformation campaigns to amplify false narratives or confuse the public.

She asserted, “These bots can create the illusion of widespread public support or opposition for certain candidates or policies by flooding online platforms with coordinated messages.”

“Through the automation of these processes, cybercriminals can manipulate popular subjects and distort digital dialogues, thereby impeding the public’s ability to differentiate authentic information from manipulated material.” Consequently, artificial intelligence is increasingly employed to exploit weaknesses in public discourse and undermine the legitimacy of democratic procedures.

This was demonstrated two months ago when threat actors used artificial intelligence to generate robocalls that purported to come from Biden. The calls urged voters not to participate in the New Hampshire primary.

Although the campaign led to the Federal Communications Commission (FCC) prohibiting AI-generated robocalls, threat actors will probably transition to alternative methods of disseminating misinformation—or choose to disregard the law entirely.

Placing Emphasis on Digital Media Literacy

It is critical that internet users improve their digital media literacy to limit the spread of misinformation by individuals and organizations.

This is particularly true with the increasing sophistication and accessibility of AI content editors and generators. However, according to Yamin, increasing public digital media literacy will require a coordinated, interdisciplinary effort.

According to him, “Individuals can cultivate skepticism by critically evaluating online content and questioning its source and credibility. Educators can integrate digital literacy into curricula, teaching students to navigate the digital landscape and recognize AI-generated content responsibly.”

Media platforms such as Meta, according to Yamin, can be of assistance by incorporating educational initiatives such as tutorials on how to identify manipulated media.

Meanwhile, policymakers can foster public media literacy through funding, advocacy for curriculum standards, and strategic partnerships with technology firms.

Although the European Union AI Act and the “AI Bill of Rights” from the Biden administration have laid the groundwork for future AI regulation, it is unlikely that these seeds will germinate for quite some time; until then, web users are responsible for protecting themselves.