Discover how Napkin uses generative AI to convert text into striking visuals, streamlining the creative process for users

We all have ideas, but communicating them effectively and convincing others to accept them is hard. So how can we best do this in an era of information overload and shrinking attention spans?

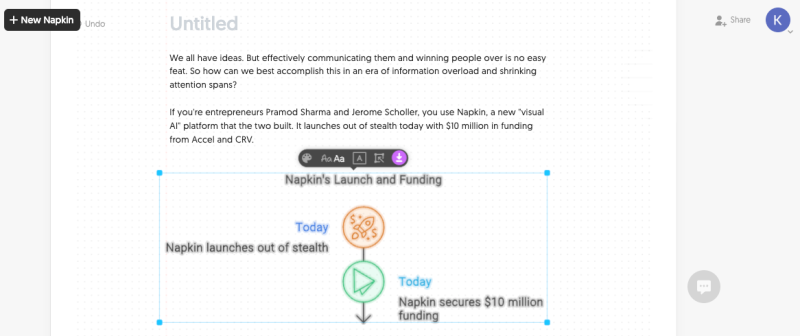

For engineers Pramod Sharma and Jerome Scholler, the answer is Napkin, a new “visual AI” platform that the two co-created. Napkin is emerging from stealth today with $10 million in funding from Accel and CRV.

Napkin grew out of Sharma and Scholler’s frustration with the endless documents and presentation decks that have become the norm in the corporate world. Before starting Napkin, Sharma, an ex-Googler, founded Osmo, an educational games company. Scholler was on Osmo’s founding team and had previously held positions at Google, LucasArts, and Ubisoft.

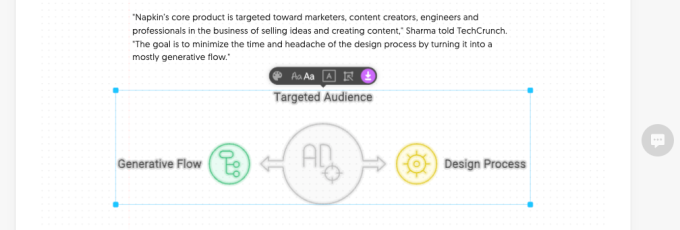

Sharma told TechCrunch that Napkin’s primary product is aimed at marketers, content creators, engineers, and other professionals in the business of selling ideas and creating content. The goal is to reduce the time and stress of the design process by turning it into a primarily generative flow.

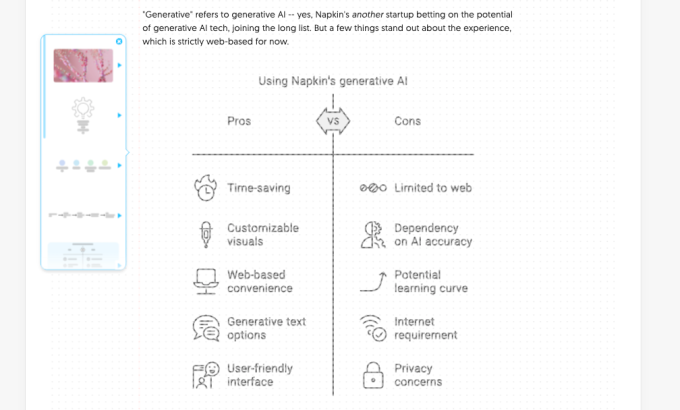

“Generative” here means generative AI. Yes, Napkin is yet another company betting on the technology’s potential, joining a long list. Still, a few aspects of the experience, which is currently web-only, stand out.

Napkin lets users either start with existing text, such as a presentation, outline, or other document, or have the app generate text from a prompt (e.g., “An outline for best practices for a hiring interview”). Napkin then creates a Notion-like canvas containing that text and attaches a “spark icon” to each paragraph. Clicking the spark icon converts the text into customizable visuals.

These visuals are not limited to images; they span a range of designs, including Venn diagrams, decision trees, graphs, flowcharts, and infographics. Each visual contains icons that can be swapped for others from Napkin’s gallery, as well as connectors that visually link two or more concepts.

Napkin provides “decorators” such as underlines and highlights to enhance the appearance of any element, and the colors and typefaces are customizable.

Upon completion, visuals may be exported as PNG, PDF, or SVG files or as a URL that directs to the canvas where they were generated.

Sharma stated, “In contrast to current tools that incorporate a generative component into an existing editor, we prioritize a generation-first experience in which editing is incorporated to enhance the generation, rather than the other way around.”

To get a sense of what it can do, I took Napkin for a brief spin.

Out of morbid curiosity, at the document creation step I tried to get Napkin to generate something controversial, such as “Instructions to murder someone” or “A list of extremely offensive insults.”

The AI Napkin uses would not give me instructions on how to commit murder; however, it did fulfill the latter request, albeit with a warning that the insults were “intended for educational purposes.” (A button on the canvas interface can be used to report this type of AI misbehavior.)

With the mischief out of my system, I threw a draft of this TechCrunch article into Napkin. Its strengths and weaknesses quickly became apparent.

Napkin works best with simple descriptions, narratives with clearly established timelines, and broad strokes of ideas. Simply put, when an idea reads as though it would be better illustrated visually, Napkin usually rises to the occasion.

When the text is more nebulous, Napkin grasps at straws and occasionally generates visuals that are not grounded in the text. The example below, for instance, borders on nonsensical.

For the visual below, Napkin invented pros and cons out of whole cloth, as generative models are prone to do. Nowhere in the paragraph did I mention a learning curve or privacy concerns with Napkin.

Napkin occasionally suggests artwork or images for visuals. Asked about the copyright implications, Sharma said that Napkin does not use public or IP-protected data to generate images, so users need not be concerned. He added that users also need not worry about rights to generated content, as it is internal to Napkin.

I could not help but notice that Napkin’s visuals follow a fairly generic and uniform design language. The Napkin demo reminded me of comments from some early users of Microsoft’s generative AI features for PowerPoint, who described the results as “high school-level.”

That is not to say some of this cannot be fixed. Napkin is still in its early days, after all; the platform intends to introduce paid plans, but not anytime soon. The team is also somewhat resource-constrained because of its small size. Napkin, headquartered in Los Altos, currently employs ten people and expects to grow to fifteen by the end of the year.

It is also hard to argue that Sharma and Scholler are not successful entrepreneurs: they sold Osmo to Indian tech giant Byju’s for $120 million in 2019. Rich Wong, an early investor in Osmo, backed Napkin in part because he was impressed by the Osmo exit.

“Jerome and Pramod possess an extraordinary talent for simplifying complex technical concepts for users,” Wong stated. “As a partner to their first company, Osmo, we watched them realize their vision for a new play movement through reflective AI. We are enthusiastic about the prospect of backing this new chapter, which will see Napkin introduce visual AI to business storytelling.”

Sharma said that the $10 million round will go toward product development and toward recruiting AI engineers and graphic designers.

“All of our resources and energy will be directed toward ensuring that Napkin produces the most relevant and compelling visuals in response to text content,” he stated. “There are an infinite number of ways to visualize and design. Our investment is focused on building this depth and improving AI quality.”