Google unveiled new Gemini features at its I/O developer conference on Tuesday.

Google is integrating its artificial intelligence model Gemini into many of its products; the AI will soon be available on the company's smartphones and in Gmail and YouTube.

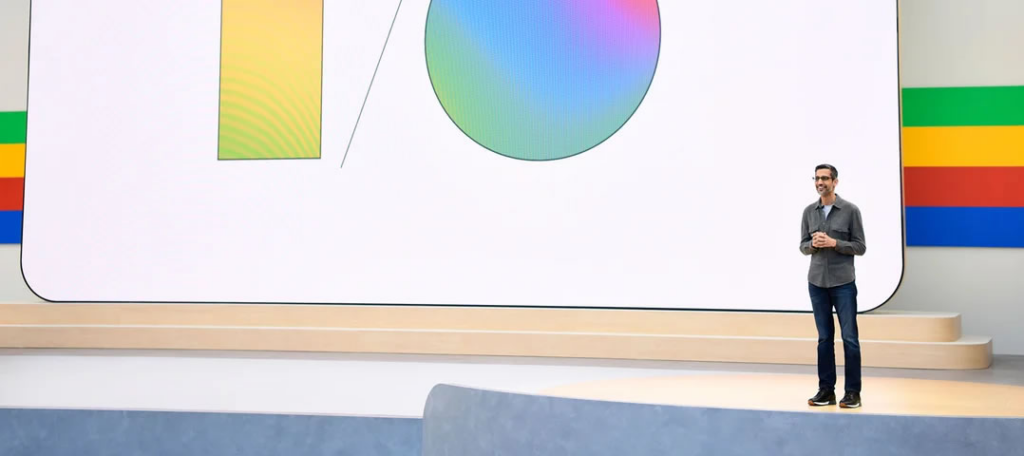

On May 14, during a keynote address at the I/O 2024 developer conference, CEO Sundar Pichai outlined several upcoming products into which the company's AI model will be built.

AI dominated Pichai's 110-minute keynote address, in which he made 121 references to it, and Gemini, which debuted in December, was front and center.

Below are the developments users can expect as Google integrates the large language model (LLM) into nearly all of its products and services, including Gmail, Android, and Search.

App interactions

Gemini will gain additional context by interacting with apps. In an upcoming update, users will be able to call up Gemini to take actions inside apps, such as dragging and dropping an AI-generated image into a message.

YouTube users will also be able to select "Ask this video" to have the AI find specific information in a video.

Gemini in Gmail

Users of Google's email platform, Gmail, can use Gemini to search, summarize, and draft their emails.

The AI assistant will also be able to handle more complex tasks, such as helping to process e-commerce returns by searching the inbox, retrieving receipts, and filling out online forms.

Gemini Live

Gemini Live, a new experience from Google, lets users hold "in-depth" voice conversations with the AI on their smartphones.

Users can interrupt the chatbot mid-answer to ask for clarification, and it adapts in real time to their speech patterns. Gemini can also see and respond to the user's physical surroundings through photos or recordings captured on the device.

Multimodal advancements

Google is developing intelligent AI agents that can reason, plan, and carry out complex multi-step tasks on the user's behalf, under the user's supervision. "Multimodal" means the AI can process audio, video, and image inputs in addition to text.

Early use cases include exploring a new city and automating the return of a purchase.

Among other upcoming developments for the company's AI model, Gemini will be fully integrated into Android and is slated to replace Google Assistant on the mobile operating system.

A new "Ask Photos" feature lets users search their photo library with Gemini-powered natural-language queries. It can understand context, recognize people and objects, and summarize photo memories in response to questions.

Google Maps will offer AI-generated summaries of places and areas, drawing on insights from the platform's mapping data.