Nvidia reported on Wednesday that it generated more than $19 billion in net income during the most recent quarter, but that did little to assure investors that its rapid growth would continue.

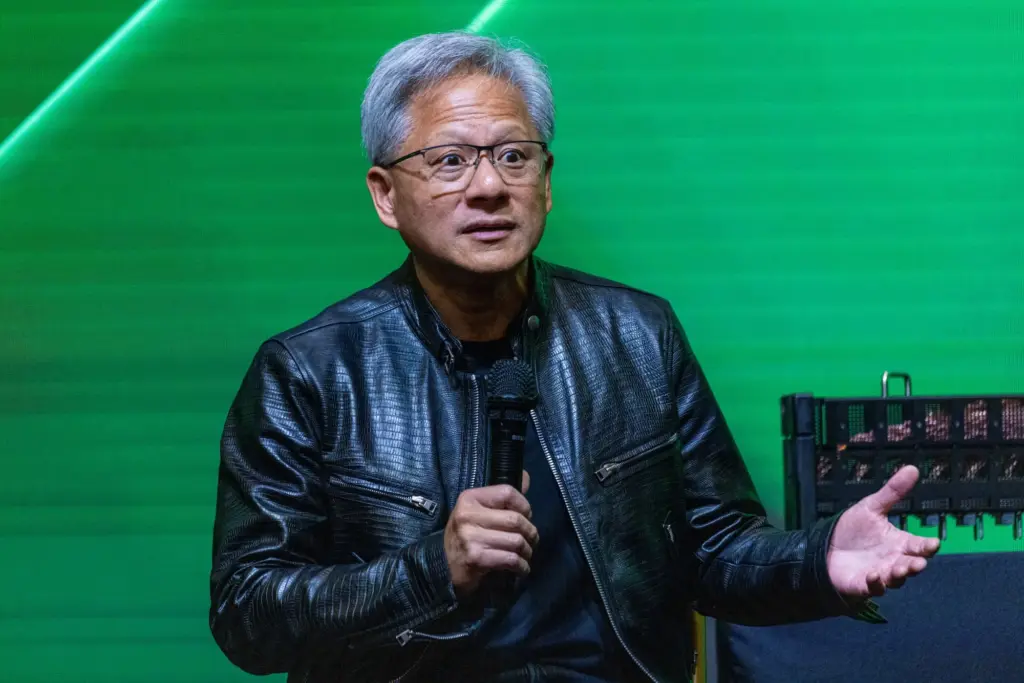

During the company’s earnings call, analysts asked CEO Jensen Huang how Nvidia would be affected if tech companies adopt new methods to improve their AI models.

The method that came up most often was “test-time scaling,” the technique underpinning OpenAI’s o1 model. It is the idea that AI models give better answers when they are given more time and computing power to “think” through questions.

Specifically, it adds more compute to the AI inference phase, which covers everything that happens after a user presses enter on their prompt.

Huang was asked whether he was seeing AI model developers shift to these new methods, and how well Nvidia’s older chips would work for AI inference.

Huang suggested that o1, and test-time scaling more broadly, could play a larger role in Nvidia’s business going forward. He called it “one of the most exciting developments” and “a new scaling law.” Huang did his best to assure investors that Nvidia is well positioned for the shift.

Huang’s remarks echoed what Microsoft CEO Satya Nadella said at a Microsoft event on Tuesday: that o1 represents a new way for the AI industry to improve its models.

This is a big deal for the chip industry because it places greater emphasis on AI inference.

While Nvidia’s chips are the industry standard for training AI models, a broad set of well-funded startups, including Groq and Cerebras, are building lightning-fast AI inference chips. That could make inference a harder market for Nvidia to compete in.

Huang told analysts that AI model developers are still improving their models by adding more compute and data during the pretraining phase, despite recent reports that progress in generative models is slowing.

During an onstage interview at the Cerebral Valley summit in San Francisco on Wednesday, Anthropic CEO Dario Amodei also said he is not seeing a slowdown in model development.

“Foundation model pretraining scaling is intact, and it’s continuing,” Huang said on Wednesday. “As you know, this is an empirical law, not a fundamental physical law, but the evidence is that it continues to scale. What we’re learning, however, is that it’s not enough.”

That is certainly what Nvidia investors wanted to hear, since the chipmaker’s stock has climbed more than 180% in 2024 on sales of the AI chips that OpenAI, Google, and Meta use to train their models.

However, partners at Andreessen Horowitz and several other AI executives have previously said that these methods are already starting to show diminishing returns.

Huang noted that most of Nvidia’s computing workloads today involve pretraining AI models rather than inference, but he attributed this to where the AI industry currently stands.

He said that in the future, more people will be running AI models, which will mean more AI inference. Huang noted that Nvidia is already the world’s largest inference platform, and that the company’s scale and reliability give it a significant advantage over startups.

“Our hopes and dreams are that someday, the world does a ton of inference, and that’s when AI has really succeeded,” said Huang. “Everybody knows that if they innovate on top of CUDA and Nvidia’s architecture, they can innovate more quickly, and they know that everything should work.”