OpenAI has revealed that it identified and stopped deceptive online influence campaigns that exploited its technology.

OpenAI, a provider of artificial intelligence, disclosed that it had detected and thwarted several online campaigns that exploited its technology to influence public opinion internationally.

On May 30, OpenAI, the AI company led by Sam Altman, announced that it had terminated accounts associated with covert influence operations.

“In the last three months, we have disrupted five covert IO [influence operations] that sought to use our models in support of deceptive activity across the internet.”

The malicious actors used AI to translate and proofread texts, generate comments on articles, and create names and profiles for social media accounts.

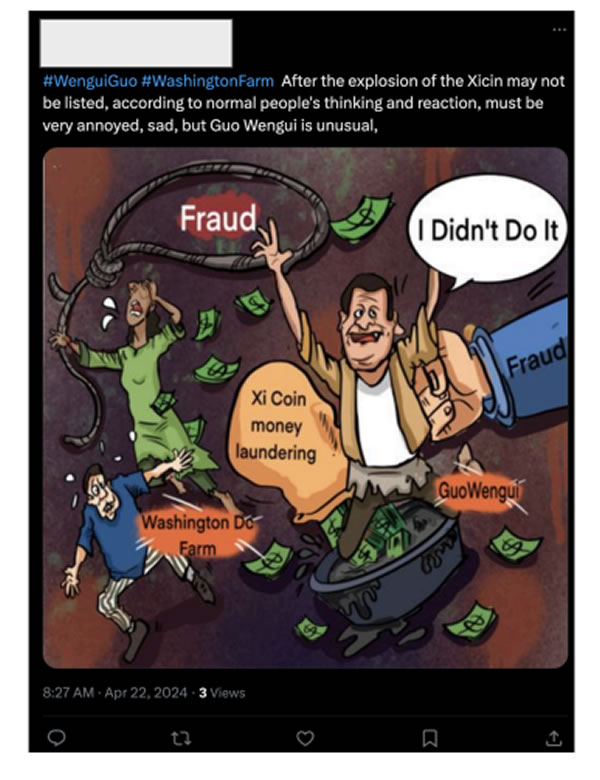

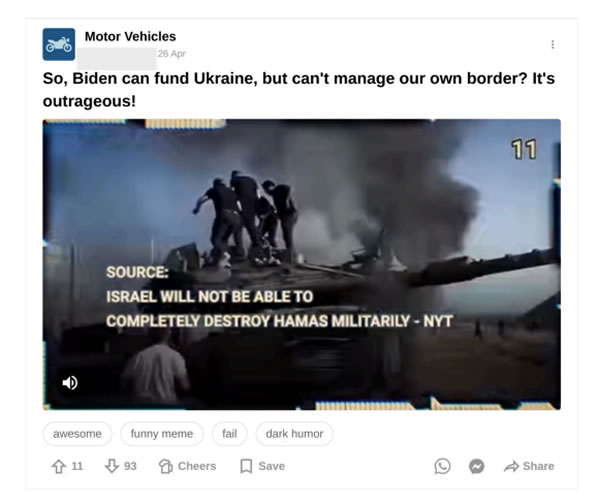

According to the organization behind ChatGPT, an operation known as “Spamouflage” used OpenAI's models to conduct social media research and produce multilingual content for platforms including X, Medium, and Blogspot with the intention of “manipulating public opinion or influencing political outcomes.”

AI was also used to manage databases and websites and to debug code.

Another operation, known as “Bad Grammar,” used OpenAI models to generate political comments and run Telegram bots targeting the United States, Ukraine, Moldova, and the Baltic States.

Another group, Doppelganger, used AI models to generate comments in English, French, German, Italian, and Polish and posted them on X and 9GAG to manipulate public opinion, the report continued.

An organization known as the “International Union of Virtual Media” used OpenAI's technology to produce long-form articles, headlines, and website content that was subsequently published on its affiliated website.

OpenAI said it also disrupted STOIC, a commercial company that used artificial intelligence to generate articles and comments posted on social media platforms, including Instagram, Facebook, and X, and on websites affiliated with the operation.

According to OpenAI, the content published by these operations covered a wide range of topics:

“Including Russia’s invasion of Ukraine, the conflict in Gaza, the Indian elections, politics in Europe and the United States, and criticisms of the Chinese government by Chinese dissidents and foreign governments.”

“Our case studies provide examples from some of the longest-running and most widely reported influence campaigns currently active,” Ben Nimmo, an OpenAI principal investigator and author of the report, told The New York Times.

According to the publication, this was the first time a major AI company had disclosed how its tools were used for online deception.

“At this time, it does not appear that our services have significantly increased audience engagement or reach for these operations,” OpenAI concluded.