OpenAI’s upcoming AI model, Orion, reached GPT-4-level performance early in training, but the overall performance gains from GPT-4 to GPT-5 appear smaller than those of previous upgrades, sources report.

According to sources with knowledge of the matter cited by The Information, the performance gains of OpenAI’s forthcoming artificial intelligence model are less substantial than those of its predecessors.

The Information reports that Orion attained GPT-4 level performance after completing only 20% of its training, as revealed by employee testing.

The quality improvement from GPT-4 to the current iteration of GPT-5 appears to be less significant than that from GPT-3 to GPT-4.

“Some researchers at the company believe Orion isn’t reliably better than its predecessor in handling certain tasks, according to the (OpenAI) employees,” The Information reported. “Orion performs better at language tasks but may not outperform previous models at tasks such as coding, according to an OpenAI employee.”

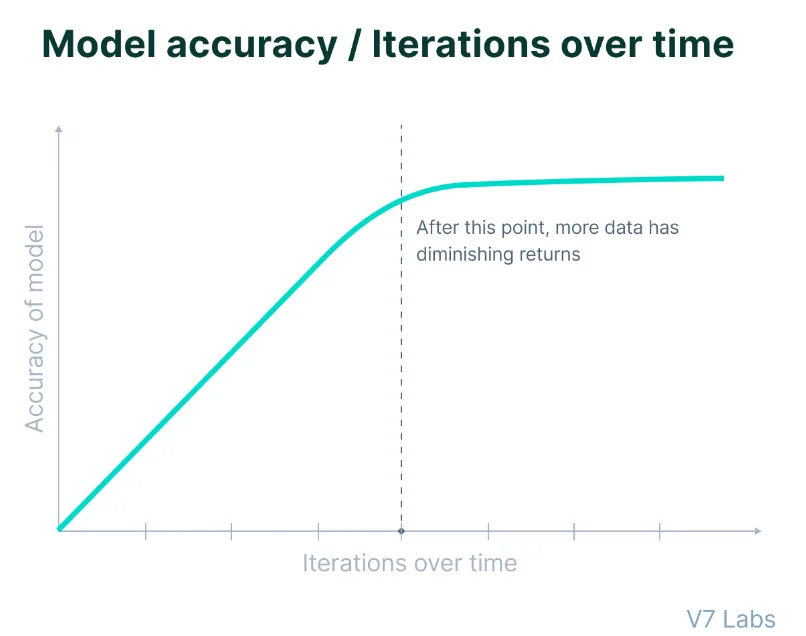

Although some may find it impressive that Orion is approaching GPT-4 at 20% of its training, it is crucial to remember that the initial stages of AI training typically result in the most significant improvements, while subsequent phases produce lesser gains.

Therefore, it is unlikely that the remaining 80% of training time will result in the same level of progress as the previous generational advances, according to sources.
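The diminishing-returns argument above can be illustrated with a toy calculation. The sketch below assumes, purely for illustration, that a model's loss falls as a power law in the fraction of training completed. The functional form, the exponent `alpha`, and the `irreducible` floor are hypothetical values chosen for the example; they are not figures from OpenAI or The Information.

```python
def toy_loss(fraction_trained, alpha=0.3, irreducible=1.0, scale=1.0):
    """Hypothetical loss curve: irreducible + scale * fraction ** (-alpha).

    All parameters are illustrative assumptions, not measured values.
    """
    if fraction_trained <= 0:
        raise ValueError("fraction_trained must be positive")
    return irreducible + scale * fraction_trained ** (-alpha)


def improvement(start, end, **params):
    """Drop in toy loss between two points in training (larger is better)."""
    return toy_loss(start, **params) - toy_loss(end, **params)


# Compare the first stretch of training (1% to 20%) with the remaining 80%.
early = improvement(0.01, 0.20)
late = improvement(0.20, 1.00)

print(f"loss reduction from 1% to 20% of training:   {early:.2f}")
print(f"loss reduction from 20% to 100% of training: {late:.2f}")
```

Under these assumed parameters the early stretch accounts for most of the total loss reduction, even though it covers a far smaller share of training time. Real training curves may behave differently.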

These limitations are surfacing at a critical juncture for OpenAI, shortly after its recent $6.6 billion funding round.

The company is contending with technical constraints that challenge traditional scaling approaches in AI development, as well as heightened expectations from investors. Its future fundraising efforts may not be met with the same enthusiasm as before, which could pose a problem for an organization that may become for-profit, as Sam Altman appears to want for OpenAI.

The underwhelming results point to a fundamental challenge facing the AI industry: the dwindling supply of high-quality training data, combined with the need to stay relevant in a field as competitive as generative AI.

Research published in June predicted that AI companies will exhaust the available public human-generated text data between 2026 and 2032, marking a critical inflection point for traditional development approaches.

The research paper asserts that “our findings suggest that the current LLM development trends cannot be sustained through conventional data scaling alone.” This underscores the necessity of alternative methods for model improvement, such as synthetic data generation, transfer learning from data-rich domains, and the utilization of non-public data.

The historical approach of training language models on publicly available text from websites, books, and other sources has reached a point of diminishing returns, as developers have “largely squeezed as much out of that type of data as they can,” The Information reports.

OpenAI is fundamentally reorganizing its AI development strategy to address these obstacles.

In response to the challenge that slowing GPT improvements pose to training-based scaling laws, the industry is refocusing its efforts on improving models after their initial training, The Information reports. This shift could result in a different type of scaling law.

To achieve this continuous improvement, OpenAI is dividing model development into two distinct tracks:

The O-Series, which appears to be codenamed “Strawberry,” is a novel approach to model architecture that emphasizes reasoning capabilities. These models are explicitly designed for complex problem-solving tasks and operate with a substantially higher computational intensity.